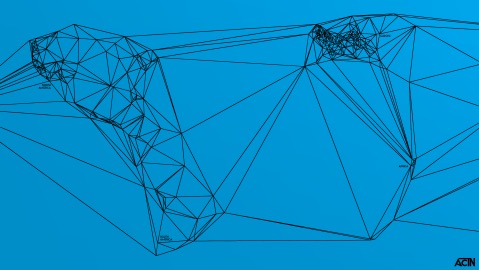

Ceph CRUSH two copies in one rack

Quick CRUSH example on how to store 3 replicas, two in rack number 1 and the third one in rack number 2.
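As a rough sketch of what such a rule could look like, the shell function below prints a CRUSH rule that picks two hosts under one rack bucket and one host under another. The bucket names `rack1` and `rack2`, the rule name, and the ruleset number are assumptions for illustration. The `ruleset` keyword matches the crushmap syntax of older releases, so adjust it for your version.

```shell
#!/bin/sh
# Print a CRUSH rule placing 2 replicas in one rack and 1 in another.
# Assumption: rack buckets named by $1 and $2 already exist in the crushmap.
print_rule() {
    first_rack="$1"
    second_rack="$2"
    cat <<EOF
rule two_in_${first_rack}_one_in_${second_rack} {
    ruleset 1
    type replicated
    min_size 3
    max_size 3
    step take ${first_rack}
    step chooseleaf firstn 2 type host
    step emit
    step take ${second_rack}
    step chooseleaf firstn 1 type host
    step emit
}
EOF
}

print_rule rack1 rack2
```

The printed rule would be pasted into a decompiled crushmap (`crushtool -d`), recompiled with `crushtool -c`, and injected with `ceph osd setcrushmap -i`.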

Removing an OSD improperly can trigger two rebalances instead of one. The best practice is to set the OSD's CRUSH weight to 0.0 as the first step.
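The ordering can be sketched as follows. Marking an OSD out first moves its data once, and removing it from the CRUSH map later changes the host weight and moves data again. Draining it through its CRUSH weight first avoids the second move. The function below only prints the commands so they can be reviewed; the OSD id `12` is a hypothetical example, and stopping the daemon is left as a comment because it depends on the init system.

```shell
#!/bin/sh
# Print the commands for draining and removing one OSD, in order.
# Nothing is executed here; review the output and run each step by hand.
osd_removal_steps() {
    id="$1"
    # 1. Drain the OSD through CRUSH first: this is the only rebalance.
    echo "ceph osd crush reweight osd.${id} 0.0"
    # 2. Once recovery has finished, mark the OSD out.
    #    (Stop the OSD daemon after this step; init-system specific.)
    echo "ceph osd out ${id}"
    # 3. Remove it from the CRUSH map, delete its key, remove the OSD.
    echo "ceph osd crush remove osd.${id}"
    echo "ceph auth del osd.${id}"
    echo "ceph osd rm ${id}"
}

osd_removal_steps 12
```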

Ceph has just moved out of the DevStack tree to comply with DevStack's new plugin policy. The code can be found on GitHub. The plugin is now also hosted on OpenStack Gerrit, which brings all the benefits of the OpenStack infrastructure, such as CI.

To use it, simply create a localrc file with the following:

enable_plugin ceph https://github.com/openstack/devstack-plugin-ceph

A more complete localrc file can be found on GitHub.

When you manage a large cluster, you do not always know where your OSDs are located.

Sometimes you have issues with PGs, such as unclean PGs, or with OSDs, such as slow requests.

When you look at ceph health detail, you only see which OSDs the PGs are acting on or which OSDs have slow requests.

Given that you might have a very large number of OSDs spread across many nodes, it is not straightforward to find and restart them.

Infernalis was released a couple of weeks ago, and I have to admit that I am really impressed by the work that has been done. So I am going to present 5 really handy things that came with this new release.

Quick tip to release the memory that tcmalloc has allocated but which is not being used by the Ceph daemon itself.

This article simply relays some recent discoveries about Ceph performance. The finding behind this story is one of the biggest improvements in Ceph performance seen in years. So I will just highlight and summarize the study in case you do not want to read it in full.

Quick and simple test to validate if the RBD cache is enabled on your client.

Quick tip to determine the location of a file stored on CephFS.
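One way to do this, sketched here under assumptions, relies on the fact that CephFS names a file's data objects after the file's inode number in hexadecimal, followed by a chunk index. The helper below builds the name of the first object from a decimal inode number. The mount path and pool name in the comments are hypothetical.

```shell
#!/bin/sh
# Build the RADOS object name of a CephFS file's first chunk
# from its inode number (decimal), as reported by stat.
cephfs_first_object() {
    inode_dec="$1"
    # Inode in hex, then the 8-digit index of the first chunk.
    printf '%x.00000000\n' "$inode_dec"
}

# Example with a fixed inode number:
cephfs_first_object 1099511627776

# Typical use (hypothetical path and pool name, GNU stat assumed):
#   obj=$(cephfs_first_object "$(stat -c %i /mnt/cephfs/myfile)")
#   ceph osd map cephfs_data "$obj"
```

`ceph osd map` then reports the PG and the OSDs that hold that object.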

Using SSD drives in some parts of your cluster might be useful, especially under read-oriented workloads. Ceph has a mechanism called primary affinity, which lets you set a higher affinity on some OSDs so that they are more likely to be primary for some PGs. The idea is to have reads served by the SSDs so clients get faster reads.
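A minimal sketch of how this could be applied: primary affinity ranges from 0 to 1 and defaults to 1, so in practice the SSD-backed OSDs keep the default and the HDD-backed ones are lowered. The OSD ids below are hypothetical, and the function only prints the commands for review. On older releases the monitors may also need `mon osd allow primary affinity = true`.

```shell
#!/bin/sh
# Print "ceph osd primary-affinity" commands for a list of OSD ids.
# Usage: primary_affinity_cmds <affinity between 0 and 1> <osd id>...
primary_affinity_cmds() {
    affinity="$1"
    shift
    for id in "$@"; do
        echo "ceph osd primary-affinity osd.${id} ${affinity}"
    done
}

# Assumption: OSDs 0-2 are SSD-backed, OSDs 3-5 are HDD-backed.
primary_affinity_cmds 1 0 1 2   # SSDs stay preferred as primaries
primary_affinity_cmds 0 3 4 5   # HDDs are primary only as a last resort
```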