MySQL-Galera cluster with HAProxy

When I started working on OpenStack, I had to look into high availability for the nova component. Unfortunately, the nova configuration needs a single entry point to connect to the MySQL database. The solution I came up with was to put HAProxy on top of my existing Galera cluster.

I. Introduction

Reminder: I will reuse the configuration from my previous article, MySQL multi-master replication with Galera. The architecture works perfectly and the communications are established using SSL. But there were some issues:

- There was no load-balancing: the application only uses one node, and Galera replicates the data across the cluster

- If the node the application commits to goes down, the application can no longer write, so nothing is replicated any more

The main goal was:

Set up a new component that provides load-balancing and gives access to my database through a single entry point, using a virtual IP address.

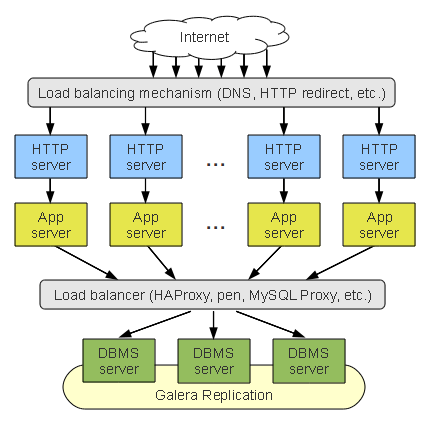

Several load-balancers can be used for this purpose:

- Pen: a tiny TCP load-balancer

- GLB: Galera Load Balancer, mainly based on Pen, which adds functionality missing from Pen

- MySQL Proxy: the one provided by MySQL

- HAProxy: THE load-balancer

Here is a use case:

II. Setup

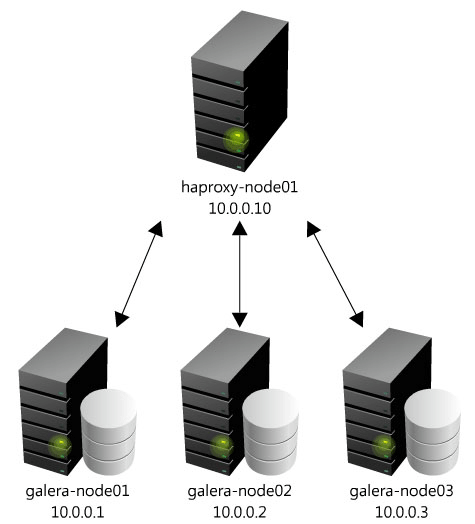

I will re-use my last Galera configuration, here the architecture I set up:

II.1. Network interfaces

NIC configuration, /etc/network/interfaces:

    # This file describes the network interfaces available on your system
    # and how to activate them. For more information, see interfaces(5).

    # The loopback network interface
    auto lo
    iface lo inet loopback

    # The primary network interface
    auto eth0
    iface eth0 inet dhcp

    # The secondary network interface
    auto eth1
    iface eth1 inet static
        address 10.0.0.10
        netmask 255.0.0.0

Local name resolution, /etc/hosts:

    127.0.0.1 localhost
    127.0.1.1 haproxy-node01
    10.0.0.2 galera-node02
    10.0.0.1 galera-node01
    10.0.0.3 galera-node03
    10.0.0.10 haproxy-node01
    10.0.0.11 haproxy-node02

II.2. HAProxy

Install it:

    ubuntu@haproxy-node01:~$ sudo apt-get install haproxy -y

We allow the init script to launch HAProxy by switching ENABLED from 0 to 1 in /etc/default/haproxy:

    ubuntu@haproxy-node01:~$ sudo sed -i 's/ENABLED=0/ENABLED=1/' /etc/default/haproxy

HAProxy configuration from scratch:

    # this config needs haproxy-1.1.28 or haproxy-1.2.1

    global
        log 127.0.0.1 local0
        log 127.0.0.1 local1 notice
        #log loghost local0 info
        maxconn 1024
        #chroot /usr/share/haproxy
        user haproxy
        group haproxy
        daemon
        #debug
        #quiet

    defaults
        log global
        mode http
        option tcplog
        option dontlognull
        retries 3
        option redispatch
        maxconn 1024
        timeout connect 5000ms
        timeout client 50000ms
        timeout server 50000ms

    listen galera_cluster
        bind 10.0.0.100:3306
        mode tcp
        option httpchk
        balance leastconn
        server galera-node01 10.0.0.1:3306 check port 9200
        server galera-node02 10.0.0.2:3306 check port 9200
        server galera-node03 10.0.0.3:3306 check port 9200

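Details of the main options:

- mode tcp: HAProxy works at the TCP level and establishes a full-duplex connection between the client and the server. This is HAProxy's default mode, but the defaults section above sets mode http, so it is set explicitly in the listen section. Two other modes are available: http and health

- balance: defines the load-balancing algorithm to use. The configuration above uses leastconn, which sends each new connection to the server with the fewest active connections. The alternative, roundrobin, is a loop system: if you have 2 servers, the connections are distributed like this: 1, 2, 1, 2

- option httpchk with check port 9200: the health check is an HTTP request sent to port 9200 of each node instead of a connection to the MySQL port

- option tcpka: enables TCP keepalive packets on both sides of the connection. It is not set in the configuration above and can be added to the listen section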
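To make the difference between the two balancing algorithms concrete, here is a small Python sketch. It is only a simplified model, not HAProxy's implementation: it ignores server weights and health checks, and the function names are made up for illustration.

```python
from itertools import cycle

# Server names taken from the listen section above.
SERVERS = ["galera-node01", "galera-node02", "galera-node03"]


def roundrobin(servers, n):
    """Return the servers picked for n consecutive connections (simple loop)."""
    picker = cycle(servers)
    return [next(picker) for _ in range(n)]


def leastconn(active):
    """Pick the server with the fewest active connections.

    `active` maps a server name to its current connection count.
    Simplification: on a tie, the first server listed wins.
    """
    return min(active, key=active.get)
```

For example, `roundrobin(SERVERS, 5)` walks through node01, node02, node03 and then starts again at node01, while `leastconn({"galera-node01": 4, "galera-node02": 1, "galera-node03": 2})` picks galera-node02 because it has the fewest open connections.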

We run HAProxy:

    ubuntu@haproxy-node01:~$ sudo service haproxy start

Does HAProxy work?

    ubuntu@haproxy-node01:~$ sudo netstat -plantu | grep 3306
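The same check can be done from a remote machine, where netstat on the HAProxy node is not available. The sketch below simply tries to open a TCP connection. The helper name is hypothetical, and the address in the usage comment is the bind address from the configuration above.

```python
import socket


def is_listening(host, port, timeout=2.0):
    """Return True if a TCP connection to host:port can be established."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, unreachable hosts and timeouts.
        return False


# Usage (assuming HAProxy is bound to the address used in this article):
#   is_listening("10.0.0.100", 3306)
```

This only proves that something accepts connections on the port. It does not tell you whether a Galera node answers behind it.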

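Load-balancing effects: run the query several times against the address HAProxy is bound to. The node name returned should change from one connection to the next:

    ubuntu@haproxy-node01:~$ mysql -uroot -proot -h10.0.0.100 -e "show variables like 'wsrep_node_name' ;"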
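Repeating this query by hand gets tedious, so here is a sketch that runs it several times and counts which node answered. It assumes the mysql command-line client is installed and reuses the root/root credentials from the command above. The function names are hypothetical.

```python
import subprocess
from collections import Counter


def parse_node_name(output):
    """Extract the value from 'wsrep_node_name<TAB>value' batch output."""
    fields = output.strip().split("\t")
    return fields[1] if len(fields) == 2 else None


def query_node_name(host, user="root", password="root"):
    """Open one connection through HAProxy and return the answering node."""
    result = subprocess.run(
        ["mysql", f"-u{user}", f"-p{password}", f"-h{host}",
         "-N", "-B",  # no column names, tab-separated batch output
         "-e", "show variables like 'wsrep_node_name';"],
        capture_output=True, text=True, check=True)
    return parse_node_name(result.stdout)


def distribution(host, attempts=6):
    """Count how many of `attempts` connections each node served."""
    return Counter(query_node_name(host) for _ in range(attempts))
```

Calling `distribution("10.0.0.100")` should return a counter with one entry per Galera node if the load-balancing works. A single entry suggests that all connections land on the same node.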

II.3. Galera cluster check

Install a local service on the Galera nodes:

    $ sudo apt-get install xinetd -y
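The service behind port 9200 only has to answer HAProxy's HTTP check with 200 when the node is usable and with an error otherwise. The following Python sketch illustrates that logic as a standalone HTTP server. It is not the xinetd-based setup itself, and it assumes the mysql client is installed, the root/root credentials used earlier, and that a node is healthy when wsrep_local_state is 4 (Synced).

```python
import subprocess
from http.server import BaseHTTPRequestHandler, HTTPServer

SYNCED = "4"  # wsrep_local_state value of a synced Galera node


def read_local_state():
    """Ask the local MySQL server for wsrep_local_state via the mysql CLI."""
    result = subprocess.run(
        ["mysql", "-uroot", "-proot", "-N", "-B",
         "-e", "show status like 'wsrep_local_state';"],
        capture_output=True, text=True, timeout=5)
    fields = result.stdout.split()
    return fields[1] if len(fields) >= 2 else ""


def http_status(state):
    """Map a wsrep_local_state value to the HTTP status HAProxy will see."""
    return 200 if state == SYNCED else 503


class CheckHandler(BaseHTTPRequestHandler):
    def _reply(self):
        try:
            state = read_local_state()
        except (OSError, subprocess.SubprocessError):
            state = ""  # mysql client missing or not answering: report failure
        code = http_status(state)
        body = b"synced\n" if code == 200 else b"not synced\n"
        self.send_response(code)
        self.send_header("Content-Type", "text/plain")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        if self.command != "HEAD":
            self.wfile.write(body)

    # option httpchk sends an OPTIONS request by default; GET helps manual tests.
    do_OPTIONS = _reply
    do_GET = _reply
    do_HEAD = _reply


def serve(port=9200):
    """Run the check service until interrupted."""
    HTTPServer(("0.0.0.0", port), CheckHandler).serve_forever()
```

Running `serve()` on a Galera node and requesting port 9200 with any HTTP client should return 200 while the node is synced and 503 otherwise, which is what the `check port 9200` lines in the HAProxy configuration rely on.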

III. Bonus scripts

III.1. HAProxy Hot Reconfiguration

Extract from the HAProxy configuration guide.
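The mechanism behind a hot reconfiguration is HAProxy's soft restart: a new process is started with the -sf option followed by the PIDs of the old processes, which then finish serving their current connections and exit. As a rough sketch, the command line could be built like this. The helper names and the paths in the usage note are assumptions, not part of the guide.

```python
def read_pids(pidfile):
    """Return the PIDs listed in pidfile, or an empty list if it is missing."""
    try:
        with open(pidfile) as handle:
            return [int(token) for token in handle.read().split()]
    except FileNotFoundError:
        return []


def build_reload_command(config, pidfile, old_pids):
    """Build the haproxy command line for a soft restart.

    -f: configuration file, -p: file where the new PIDs are written,
    -sf: old processes asked to finish their connections and exit.
    """
    cmd = ["haproxy", "-f", config, "-p", pidfile]
    if old_pids:
        cmd += ["-sf", *map(str, old_pids)]
    return cmd
```

With the usual Debian paths (an assumption), `build_reload_command("/etc/haproxy/haproxy.cfg", "/var/run/haproxy.pid", read_pids("/var/run/haproxy.pid"))` gives the argument list to pass to `subprocess.run` as root.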


This setup is interesting but not ready for production. Indeed, putting a single HAProxy node on top creates a huge single point of failure (SPOF). In the next article, I will set up an active/passive failover cluster with Pacemaker.

III.2. GLB init script

First download it from here and install it :)

Init script for the Galera Load Balancer daemon.


Many thanks to Daniel Bonekeeper for sharing it :)
