Multi MySQL instances with Galera

No! This article is not about Ben-Hur or some other ancient Rome’s history facts. It’s yet another article about Galera, I don’t introduce Galera anymore. This how-to will help you to run multiple MySQL instance replicated thanks to Galera.

I. Principle

MySQL Multi?

This term stands for running multiple MySQL instances on the same server. Each instance will be completely isolated to the others. Indeed every running instance will have some dedicated stuff:

- User, running the MySQL process

- Socket

- Port: like 3306

- Directory: contains all the MySQL environment owned by the instance (database, bin-log, etc…)

For further information visit the MySQL official documentation.

In this article I assume that Galera is already installed. If you don’t know how to setup Galera, check my article about it. First make sure that you can run MySQL on both node with Galera replication fully functionnal. Since you validate that, we can start to work on MySQL multi.

What do you need?

- An existing Galera setup

- The mysqld_multi script. This script seeks for a [mysqld] section in

my.cnfand executes the instance according to the number used as argument. Example: [mysqld1] - The INIT script for mysqld_multi

- A good knowledge of Galera, I highly suggest you to read my previous article about Galera

II. Setup

II.1. Requirements installation

Install the mysql multi management script (if not already present on your system):

$ sudo wget https://raw.github.com/leseb/Scripts/master/mysqld_multi.sh -O /usr/bin/mysql_multi |

Install the INIT script:

$ sudo wget https://raw.github.com/leseb/Scripts/master/mysqld_multi_init.sh -O /etc/init.d/mysql_multi |

II.2. MySQL multi and Galera

II.2.1. MySQL Multi

Here we are!

The use case designed here is the following: you have multiple customers and you provide a database shared storage (as a service or not). You need a complete isolation between all the instances. For use case purpose we setup 2 instances. Each instances will be one customer.

First create a directory for mysqld_multi, this directory will store the logs of the management script:

$ sudo mkdir -p /mysql/mysqld_multi/ |

Use case customer 01:

$ sudo mkdir -p /mysql/customer01/{data,innodb,innodb_log,binlog,relaylog,log,tmp,socket} |

Use case customer 02:

$ sudo mkdir -p /mysql/customer02/{data,innodb,innodb_log,binlog,relaylog,log,tmp,socket} |

Now create the MySQL environment for each instances. mysql_install_db initializes the MySQL data directory and creates the system tables that it contains, if they do not exist.

$ sudo mysql_install_db --user=customer01 --datadir=/mysql/customer01/data |

W ATTENTION: those actions need to be performed on EVERY nodes.

II.2.2. The Galera trick

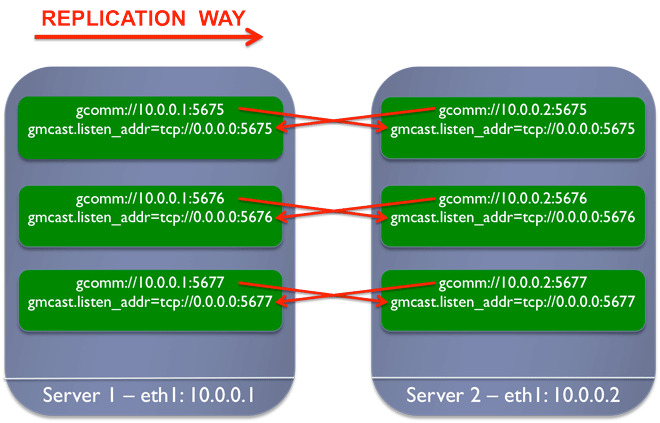

Since we use Galera, you have to completely understand how the replication works internally, rather the gcomm:// URL purpose. It’s already explained in my first article about Galera. But here is a little reminder:

- wsrep_cluster_address: Address to connect to cluster. For example

gcomm://10.0.0.1:5675. - wsrep_provider_options=”gmcast.listen_addr=tcp://0.0.0.0:5675”. Address at which Galera listens to connections from other nodes

During your first setup, your first node needs to use this gcomm://, since it’s the beginning of the setup there is no member to connect with. The first node will act as the donnor for the next once. This is why the other node will use the type of URL: gcomm://donnor-ip address.

Since we run multiple instance, there is as many replication as the number of of instance. Each instance will listen to connection from other nodes with the gmcast.listen_addr parameter.

Picture speaks louder than workds:

W NEVER EVER KEEP THE GCOMM URL LIKE THIS: gcomm://0.0.0.0. This address becomes ‘useless’ when the node is connected to the cluster. This is the ‘first shot’ to connect to your cluster after that evert node will be connected to each others. If you let this address unchanged your server will attempt to create/declare a new cluster at every restart.

W AFTER YOU SETUP HAS 2 MEMBERS CHANGE THIS URL WITH THE ADDRESS OF ONE OF THE MEMBER OF YOUR CLUSTER.

II.3. Server 01 configuration

Now make sure those directories are empty:

/etc/mysql//etc/mysql/conf.d/

And create this configuration file: /etc/mysql/my.cnf and fulfill it with the following content:

[mysqld_multi]

mysqld = /usr/sbin/mysqld

mysqladmin = /usr/bin/mysqladmin

user = mysql_super_user

password = mysql_super_user_secure_password

log = /mysql/mysqld_multi/mysqld_multi.log

[mysqld1]

bind-address = 0.0.0.0

socket = /mysql/customer01/socket/customer01.pid

port = 3307

pid-file = /mysql/customer01/customer01.pid

datadir = /mysql/customer01/data

user = customer01

tmpdir = /mysql/customer01/tmp

log-bin = /mysql/customer01/binlog/binlog

log-bin-index = /mysql/customer01/binlog/binlog.index

log-error = /mysql/customer01/log/mysql.err

general_log_file = /mysql/customer01/log/mysql.log

general_log = 1

wsrep_provider=/usr/lib/galera/libgalera_smm.so

wsrep_cluster_name="my_wsrep_cluster"

wsrep_cluster_address="gcomm://0.0.0.0:5674"

wsrep_provider_options="gmcast.listen_addr=tcp://0.0.0.0:5674"

wsrep_certify_nonPK=1

wsrep_convert_LOCK_to_trx=0

wsrep_auto_increment_control=1

wsrep_drupal_282555_workaround=0

wsrep_causal_reads=0

wsrep_sst_method=rsync

# Must have and Performance values

binlog_format=ROW

default-storage-engine=innodb

sync_binlog=0

innodb_flush_log_at_trx_commit=0

innodb_doublewrite=0

innodb_autoinc_lock_mode=2

innodb_locks_unsafe_for_binlog=1

query_cache_size=0

query_cache_type=0

[mysqld2]

bind-address = 0.0.0.0

socket = /mysql/customer02/socket/customer02.pid

port = 3308

pid-file = /mysql/customer02/customer02.pid

datadir = /mysql/customer02/data

user = customer02

tmpdir = /mysql/customer02/tmp

log-bin = /mysql/customer02/binlog/binlog

log-bin-index = /mysql/customer02/binlog/binlog.index

log-error = /mysql/customer02/log/mysql.err

general_log_file = /mysql/customer02/log/mysql.log

general_log = 1

wsrep_provider=/usr/lib/galera/libgalera_smm.so

wsrep_cluster_name="my_wsrep_cluster"

wsrep_cluster_address="gcomm://0.0.0.0:5675"

wsrep_provider_options="gmcast.listen_addr=tcp://0.0.0.0:5675"

wsrep_certify_nonPK=1

wsrep_convert_LOCK_to_trx=0

wsrep_auto_increment_control=1

wsrep_drupal_282555_workaround=0

wsrep_causal_reads=0

wsrep_sst_method=rsync

# Must have and Performance values

binlog_format=ROW

default-storage-engine=innodb

sync_binlog=0

innodb_flush_log_at_trx_commit=0

innodb_doublewrite=0

innodb_autoinc_lock_mode=2

innodb_locks_unsafe_for_binlog=1

query_cache_size=0

query_cache_type=0

R The IP address of this node is 10.0.0.1.

Finally start your instances, without any argument the script will run every instance one by one:

$ sudo mysqld_multi start |

Don’t worry, if everything is ok, you won’t have any output, you can show the status of your instance with this command:

$ sudo mysqld_multi report |

You can also check the process:

$ sudo ps aux | grep mysqld |

Grant the mysql_super_user user with some options in order to able the mysqld_multi script to manipulate each instances:

$ sudo echo "GRANT SHUTDOWN ON *.* TO 'mysql_super_user'@'localhost' IDENTIFIED BY 'mysql_super_user_secure_password'" | mysql --socket=/mysql/customer01/socket/customer01.pid |

II.4. Server 02

Here only the Galera configuration changes, so :

[mysqld_multi]

mysqld = /usr/sbin/mysqld

mysqladmin = /usr/bin/mysqladmin

user = mysql_super_user

password = mysql_super_user_secure_password

log = /mysql/mysqld_multi/mysqld_multi.log

[mysqld1]

bind-address = 0.0.0.0

socket = /mysql/customer01/socket/customer01.pid

port = 3307

pid-file = /mysql/customer01/customer01.pid

datadir = /mysql/customer01/data

user = customer01

tmpdir = /mysql/customer01/tmp

log-bin = /mysql/customer01/binlog/binlog

log-bin-index = /mysql/customer01/binlog/binlog.index

log-error = /mysql/customer01/log/mysql.err

general_log_file = /mysql/customer01/log/mysql.log

general_log = 1

wsrep_provider=/usr/lib/galera/libgalera_smm.so

wsrep_cluster_name="my_wsrep_cluster"

wsrep_cluster_address="gcomm://10.0.0.1:5674"

wsrep_provider_options="gmcast.listen_addr=tcp://0.0.0.0:5674"

wsrep_certify_nonPK=1

wsrep_convert_LOCK_to_trx=0

wsrep_auto_increment_control=1

wsrep_drupal_282555_workaround=0

wsrep_causal_reads=0

wsrep_sst_method=rsync

# Must have and Performance values

binlog_format=ROW

default-storage-engine=innodb

sync_binlog=0

innodb_flush_log_at_trx_commit=0

innodb_doublewrite=0

innodb_autoinc_lock_mode=2

innodb_locks_unsafe_for_binlog=1

query_cache_size=0

query_cache_type=0

[mysqld2]

bind-address = 0.0.0.0

socket = /mysql/customer02/socket/customer02.pid

port = 3308

pid-file = /mysql/customer02/customer02.pid

datadir = /mysql/customer02/data

user = customer02

tmpdir = /mysql/customer02/tmp

log-bin = /mysql/customer02/binlog/binlog

log-bin-index = /mysql/customer02/binlog/binlog.index

log-error = /mysql/customer02/log/mysql.err

general_log_file = /mysql/customer02/log/mysql.log

general_log = 1

wsrep_provider=/usr/lib/galera/libgalera_smm.so

wsrep_cluster_name="my_wsrep_cluster"

wsrep_cluster_address="gcomm://10.0.0.1:5675"

wsrep_provider_options="gmcast.listen_addr=tcp://0.0.0.0:5675"

wsrep_certify_nonPK=1

wsrep_convert_LOCK_to_trx=0

wsrep_auto_increment_control=1

wsrep_drupal_282555_workaround=0

wsrep_causal_reads=0

wsrep_sst_method=rsync

# Must have and Performance values

binlog_format=ROW

default-storage-engine=innodb

sync_binlog=0

innodb_flush_log_at_trx_commit=0

innodb_doublewrite=0

innodb_autoinc_lock_mode=2

innodb_locks_unsafe_for_binlog=1

query_cache_size=0

query_cache_type=0

R The IP address of this node is 10.0.0.2.

II.5. Final!

Stop every instance on the server 1:

$ sudo mysqld_multi stop |

Finally change Galera’s options with the IP of the server 2 in order to enable the cross replication:

- for the instance 1:

wsrep_cluster_address="gcomm://10.0.0.2:5674" - for the instance 2:

wsrep_cluster_address="gcomm://10.0.0.2:5675"

Finally start MySQL again on the server 1:

$ sudo mysqld_multi start |

II.6. Garbd

Last but not least, configure your arbitrator like so:

$ garbd -a gcomm://10.0.0.1:5674 -g my_wsrep_cluster -l /tmp/1.out -d |

R Remenber that garbd is always running on a different and reliable machine outside your cluster.

Et voilà!

III. Bonus script

As always I wrote a little script to check your Galera accross all your socket. Put this script in /usr/bin/, pass a parameter to the script while execute it:

|

Ideally this script should be merged with the mysqlq_multi perl script.

You should already have noticied that the process of adding a new instances is fearly easy to script. Happy multi mysql with Galera!

Comments